An Introduction to OpenFace for Head and Face Tracking

James Trujillo ( james.trujillo@donders.ru.nl )

Wim Pouw ( wim.pouw@donders.ru.nl )

18-11-2021

Info documents

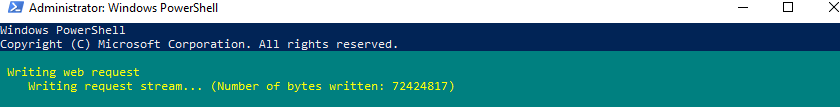

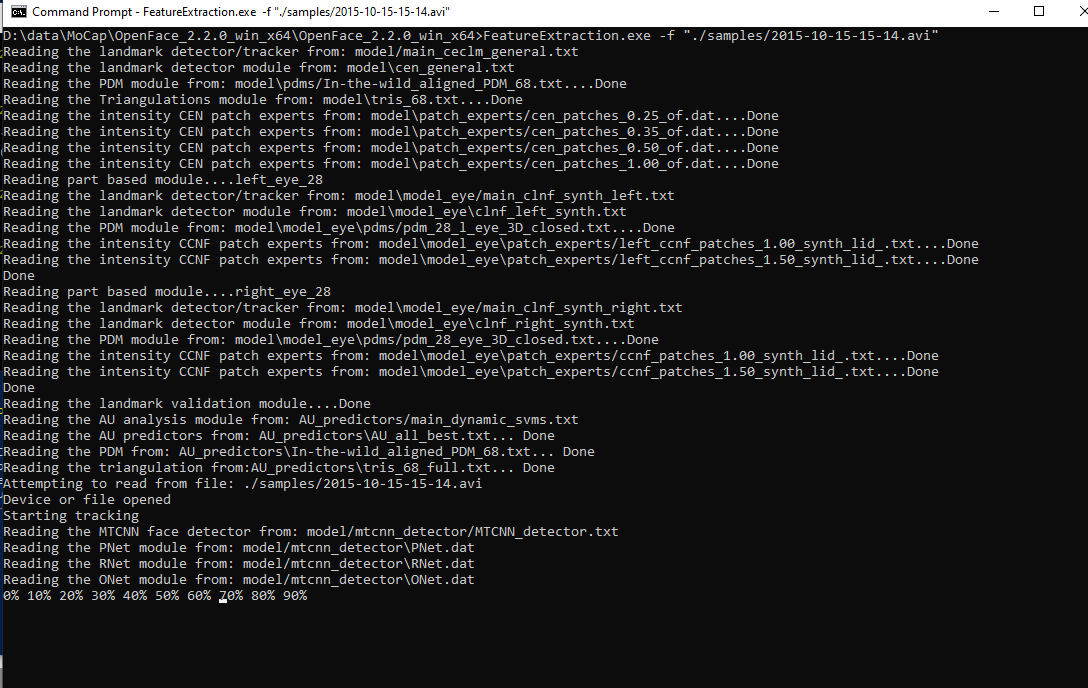

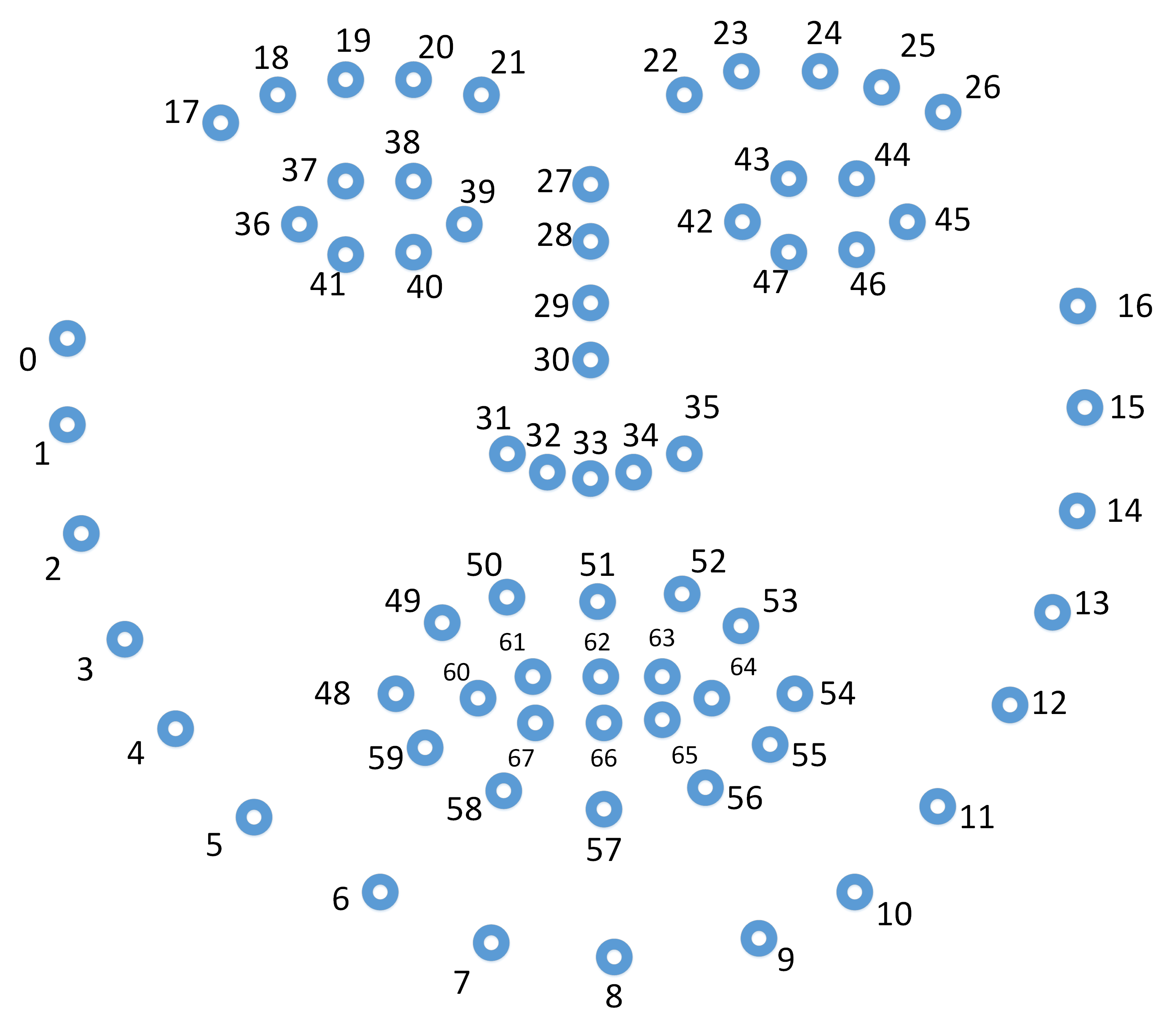

This Python coding module demonstrates how to use OpenFace, an open-source program that provides face and head tracking for images and videos. We'll go over basic installation, the simple commands that run the tracking, and take a first look at the output data.

- OpenFace: https://github.com/TadasBaltrusaitis/OpenFace

citation: Tadas Baltrušaitis, Amir Zadeh, Yao Chong Lim, and Louis-Philippe Morency. OpenFace 2.0: Facial Behavior Analysis Toolkit. IEEE International Conference on Automatic Face and Gesture Recognition, 2018.

Visual Studio download (VS required to run OpenFace via command line): https://visualstudio.microsoft.com/thank-you-downloading-visual-studio/?sku=Community&rel=17

A detailed tutorial for using ExploFace: https://github.com/emrecdem/exploface/blob/master/TUTORIALS/tutorial1.ipynb

code location: https://github.com/WimPouw/EnvisionBootcamp2021/tree/main/Python/FaceTracking_OpenFace

packages to download: exploface

citation: Trujillo, J.P. & Pouw, W. (2021-11-18). An Introduction to OpenFace for Head and Face Tracking [day you visited the site]. Retrieved from: https://wimpouw.github.io/EnvisionBootcamp2021/OpenFace_module.html
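
As a preview of the workflow this module covers (run the tracker from the command line, then take a first look at the output), here is a minimal sketch using only the Python standard library. It assumes OpenFace's `FeatureExtraction` executable is available and that the `-f` (input file) and `-out_dir` (output folder) arguments behave as listed in the OpenFace command-line documentation. The helper names and all paths are hypothetical, and the assumption that the output CSV is named after the input video should be checked against your own output folder.

```python
import csv
import subprocess
from pathlib import Path


def build_openface_command(executable, video_path, out_dir):
    """Return the argument list for one FeatureExtraction run.

    -f names the input video and -out_dir the output folder.
    """
    return [str(executable), "-f", str(video_path), "-out_dir", str(out_dir)]


def run_openface(executable, video_path, out_dir):
    """Run the tracker and return the path of the CSV we expect it to write."""
    subprocess.run(build_openface_command(executable, video_path, out_dir), check=True)
    # Assumption: the output CSV is named after the input video.
    return Path(out_dir) / (Path(video_path).stem + ".csv")


def load_openface_csv(csv_path):
    """Read the CSV into a list of dicts, stripping stray spaces from names and values."""
    with open(csv_path, newline="") as handle:
        reader = csv.reader(handle)
        header = [name.strip() for name in next(reader)]
        return [dict(zip(header, (value.strip() for value in row))) for row in reader]


# Example usage (hypothetical paths, left commented out so nothing runs on import):
# csv_path = run_openface("OpenFace/FeatureExtraction.exe", "videos/sample.mp4", "processed")
# rows = load_openface_csv(csv_path)
# print(rows[0].keys())
```

Stripping whitespace from the header is a defensive choice: if the column names in the file carry leading spaces, lookups by plain name would otherwise fail.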
